Politically speaking, the internet is now one of the major battlegrounds in the ongoing conflict of ideologies. Social media erupted into election fever recently, followed by the infamous backlash to the results. However, some have said that certain topics, which they consider important, are often absent from news feeds.

Facebook has been blamed for a lack of diversity in its news feeds before, but now the site has published an in-depth study of the issue. According to some critics, the algorithms that decide which content is displayed leave little room for more sensitive issues, but Facebook's study says the content you see has far more to do with the people you add as friends.


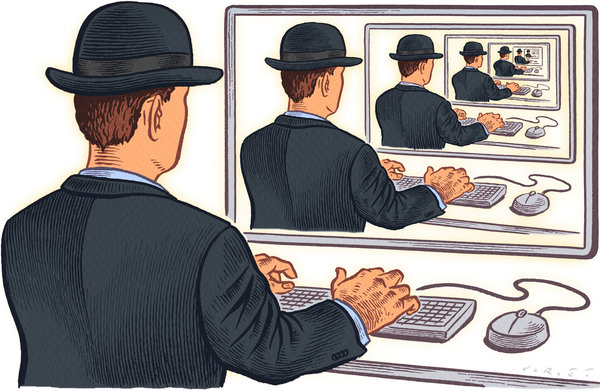

For the last few years, other social media sites, most notably Tumblr and Reddit, have been accused of forming so-called 'echo chambers'. An echo chamber forms when large segments of like-minded individuals set the tone for an entire community, often shouting down people with different opinions to maintain the status quo.

To the casual observer, Tumblr and Reddit each attract a certain clientèle. Both are anonymous and both are built around user interaction, but their communities often speak for only one side of an issue. Tumblr's progressive, liberal 'social justice' culture often clashes with Reddit's libertarian and traditionalist viewpoints.

While the two sites represent opposite branches of politics (left and right, liberal and conservative), neither is known for a wide diversity of opinion. Facebook, however, is a different breed of network: it's not anonymous, it's far bigger, and people of every school of thought can be found there.

The study concluded that if an echo chamber forms on Facebook, it is due to the connections users make. Looking at users who described themselves as politically left or right, the study found that a reasonable 25%-30% of their friends and of the posts they saw differed from them on important viewpoints. Potentially, then, about one in four posts you see may challenge your views regardless of what you believe, which seems like a comfortable amount.


Facebook's study seems to have squashed the complaints that its algorithm was to blame; according to the study, it boils down to similar points of view being shared among friends. In real life, people usually believe the same things as their friends, and Facebook's lack of anonymity seems to make the same rules apply online.

Edgier social networks are edgy for a reason. You're more likely to find hardline members of political movements there, and that's okay, but it often means that as a user you need a much thicker skin to survive in the echo chamber.